AI x Future

On Wednesday 13th of March 2019, the EA Rotterdam group held its seventh reading & discussion group. These events are a deeper dive into specific EA topics.

The topic for this event was AI x Future: Prosperity or destruction?

During the evening we discussed how artificial intelligence (AI) could lead to a wide range of possible futures.

Although we instructed each group to argue only one side, all three groups naturally also considered the opposing point of view. Here are the questions, presentations, and my personal summary of the night.

We (the organisers of EA Rotterdam) thank Alex from V2_ (our venue for the night) for hosting us, and Jan (also from V2_) for being our AI expert for the evening.

If you want to attend an EA Rotterdam event, visit our Meetup page.

Questions

These were our starting questions:

Bright Lights

- What could AI do for you personally (if AI did/solved X, I could now Y)?

- What could be the effect of AI on energy production? Could AI help prevent/solve/reverse climate change?

- What effect could AI have if fully implemented in health research (protein folding, cancer research, Alzheimer’s)?

- Could AI help us produce the food we need with fewer resources (new crops, cultured meat, fewer pesticides, etc)?

- How would mobility change with self-driving cars, trucks, buses, planes?

- Could we prevent crime from happening (e.g. intelligent cameras, prediction algorithms)?

- Can AI actually improve our privacy?

- Can we work with AI to achieve more together (e.g. human-computer chess teams a few years back, a doctor working with an AI imaging system)?

- Will AI make wars obsolete? Or when they happen, more humane?

- Could AI fight loneliness (e.g. robots in nursing homes)?

- Could AI help us learn and remember better (e.g. a ‘smarter’ Duolingo)?

- Will AI be conscious? If yes, could it ‘experience’ unlimited amounts of happiness?

Dark Despair

- Will there be any jobs for us to do in the (near/long-term) future? What can’t AI do?

- Who will enjoy the economic benefits from AI (Google/Facebook shareholders)? Will society become more unequal than ever before in history?

- Could someone hack the autonomous cars of the future?

- Will we live in a totalitarian (China/Minority Report) state enabled by AI?

- Does AI mean the end of privacy (everything tracked and analysed)?

- Will AI enable more gruesome warfare (more weapons, no-one at the button)?

- Will we lose contact with each other / lose our humanity (e.g. robots in nursing homes, chatbots in Japan)?

- What if the goals of the AI don’t align with humanity’s goals? Can we still turn it off? How could we even align these goals – philosophy hasn’t really figured this one out yet!?

- Will AI be conscious? If no, will there still be humans to ‘experience’ the future?

Bonus:

- What are some concrete (future) examples of AI destroying the world?

- Can you think of some counter-arguments for the points you expect the other group to raise?

- Write down the date by which your group thinks AGI will happen (don’t show it to the other team).

AI x Future

We started the evening with a presentation by Christiaan and Floris (me). In it, we explained Effective Altruism (EA) and how we look at AI through this framework.

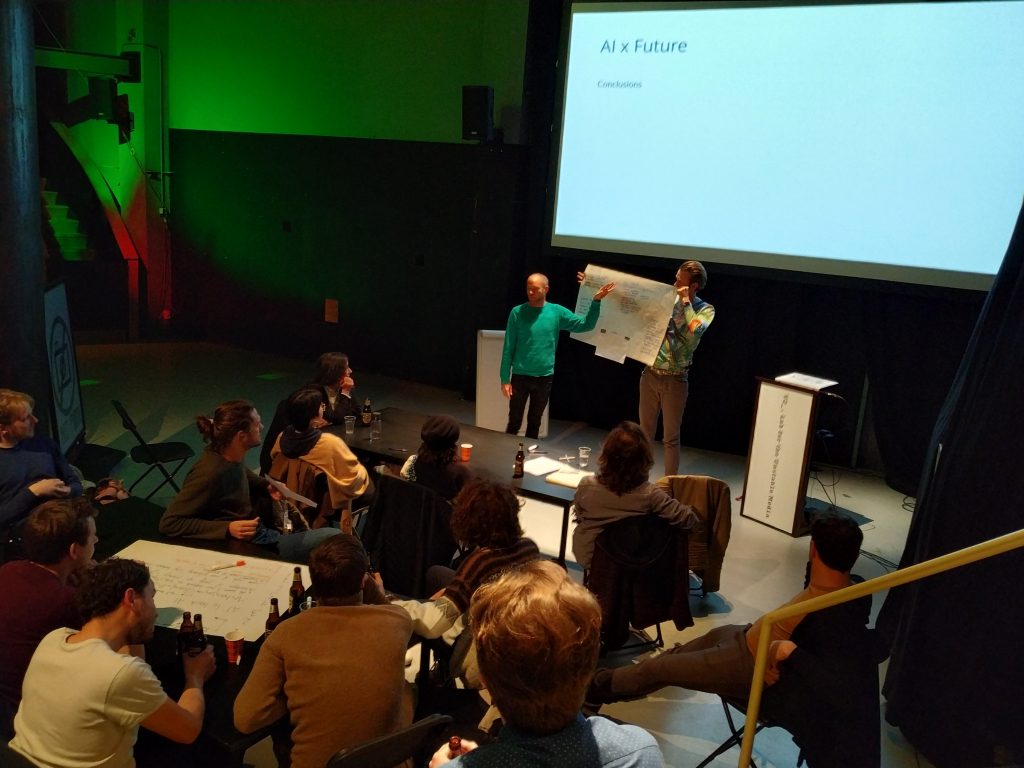

After that, we split everyone into two sides (three groups in total), and each group made a mind map/overview of the questions above (download them). This is a summary of both sides:

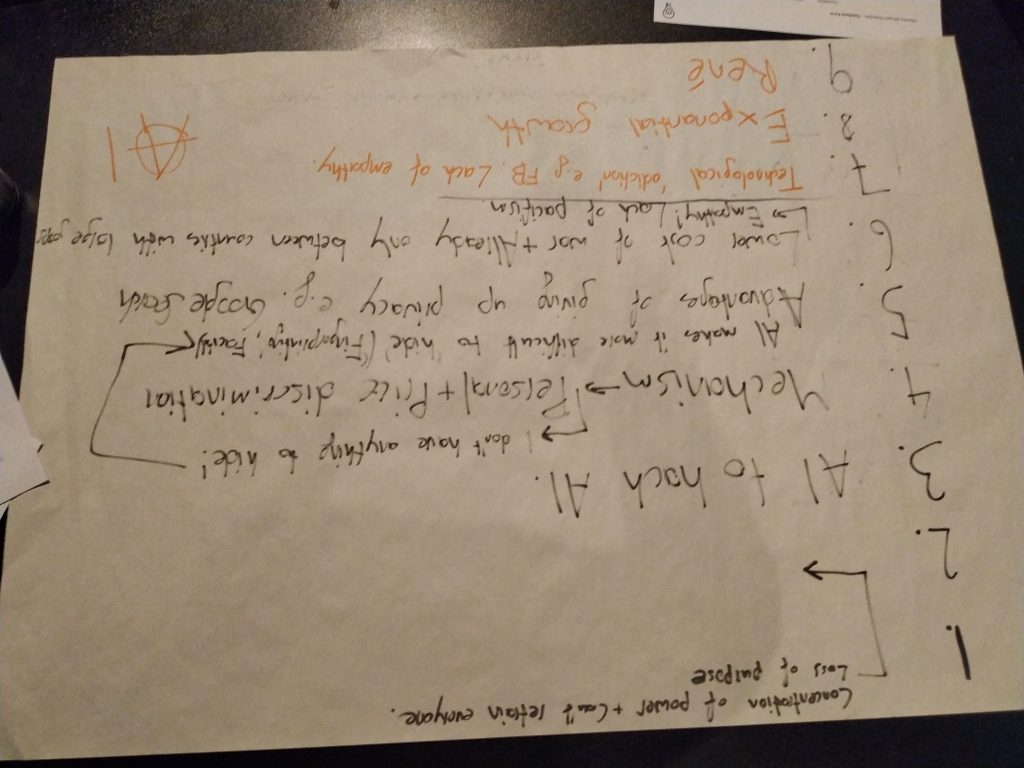

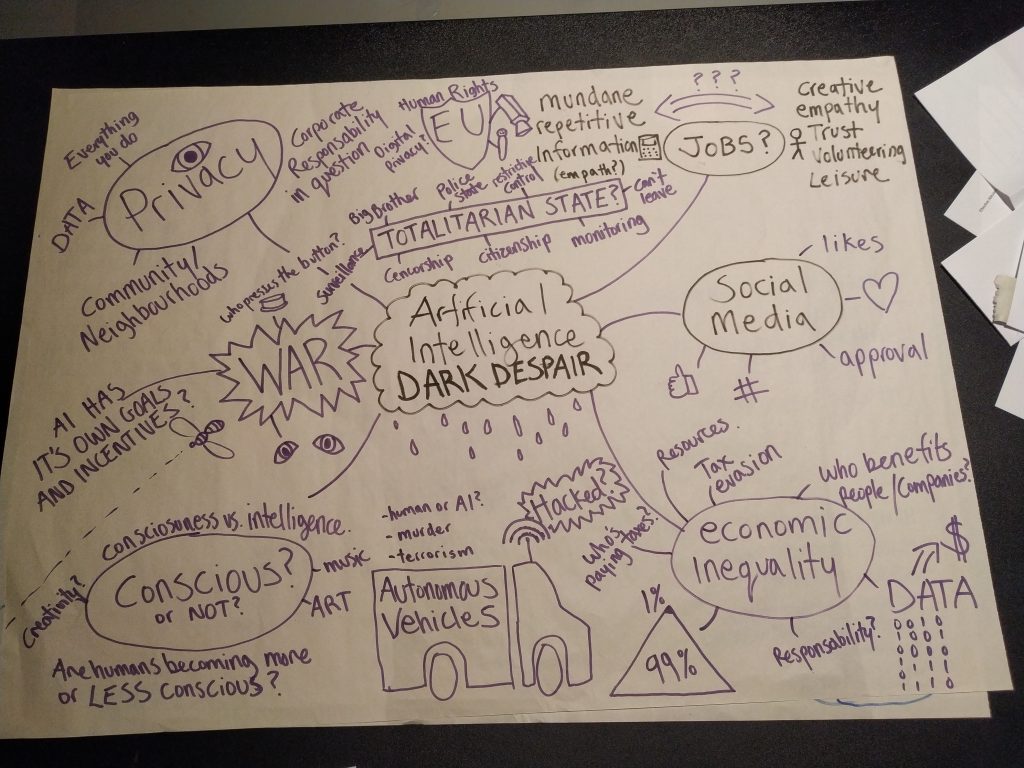

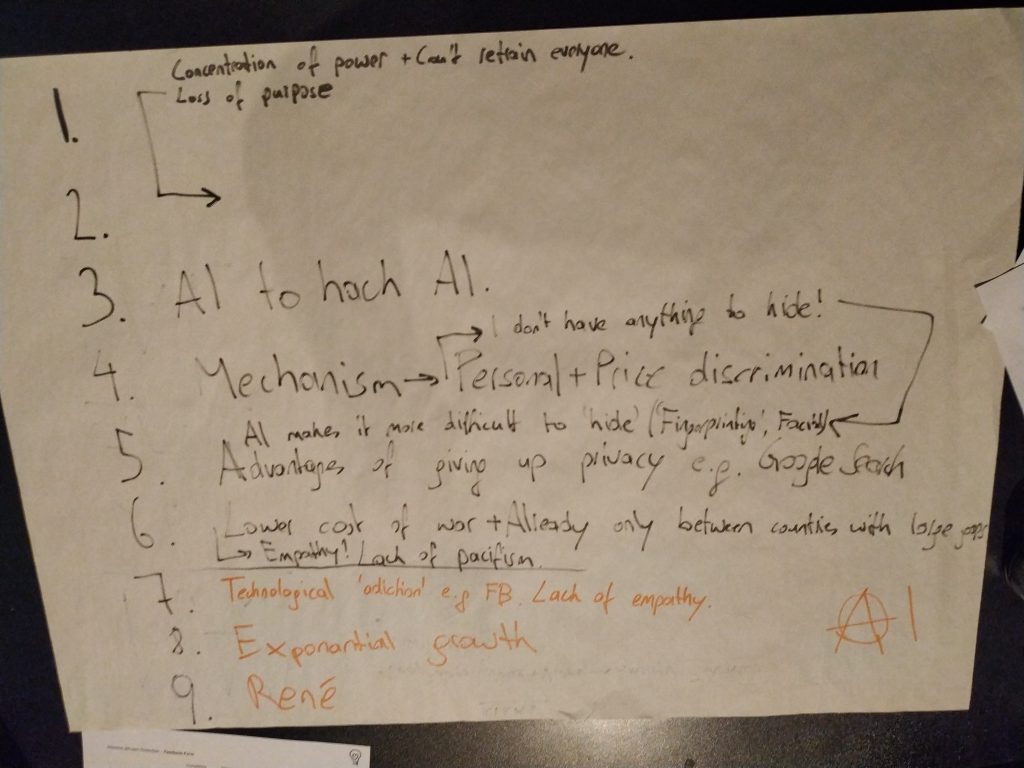

Groups 1 & 2 – Dark Despair

These are the two posters from the Dark Despair groups:

Privacy

In a world with AGI, an AI could track everything you do. Data that now sits in disparate systems could be combined and acted upon (not in your interest). Think 1984. You won’t be able to hide; your face will be recognised.

Totalitarian State

This leads right into the second point of despair: a totalitarian state, one where Big Brother is always watching. The EU is making a case for privacy and human rights, but can it withstand AGI?

Censorship, like that already in place in China today, could lead to total control of the population. You might not be allowed to leave (e.g. if your social credit score is too low).

War

Killer bees, but this time for real (or rather, artificial, with tiny bombs). This is already becoming reality, and warfare could become more dangerous and one-sided with an AGI on one side of the battle. Who presses the button? And are the goals of the AGI the same as ours?

And what if this makes war cheaper? Instead of training a soldier for years at a cost of millions, just fly in some (small) drones that control themselves. Heck, what if they can repair themselves?

Will there be any empathy left in war? If you’re not there in person, why would you see the other side as real humans?

Jobs

Will there be any jobs left? A(G)I might free us from mundane and repetitive tasks (a positive in most cases), but what about

And for whom would we be working? Will it be to better humanity, or to further the goals of the AGI (which might not align with ours)?

Social Media

Looking closer to home, learning algorithms (artificial narrow intelligence, or ANI) are already influencing our lives and optimising for the time we spend on social networks, making us hunger for likes, hearts, and approval. What if Facebook (social media), Amazon (buy this now, watch Twitch), Google (watch YouTube), Netflix, etc. become even better at this? Will we become the fat people from WALL-E?

Economic Inequality

And whilst we’re binge-watching some awesome new series, the AGI is hacking away at tax evasion (which some people are already good at; imagine the possibilities for an AGI).

Where will the benefits go? Will they go to society (as they do now, via taxes and positive externalities), or will companies (and their executives) rake in all the benefits? With more and more data, who will benefit? How will the benefits ‘trickle down’?

Autonomous Vehicles

In many US states,

Consciousness

Consciousness and intelligence don’t necessarily go hand in hand. Will AGI enjoy art, music, or anything at all? And, to my surprise, the group also asked: are humans becoming less conscious?

AGI vs AGI

What if you take the time to build safety into your AGI, but the other team (read: country) doesn’t, and their AGI becomes more intelligent faster without sharing our goals? Guess who ‘wins’.

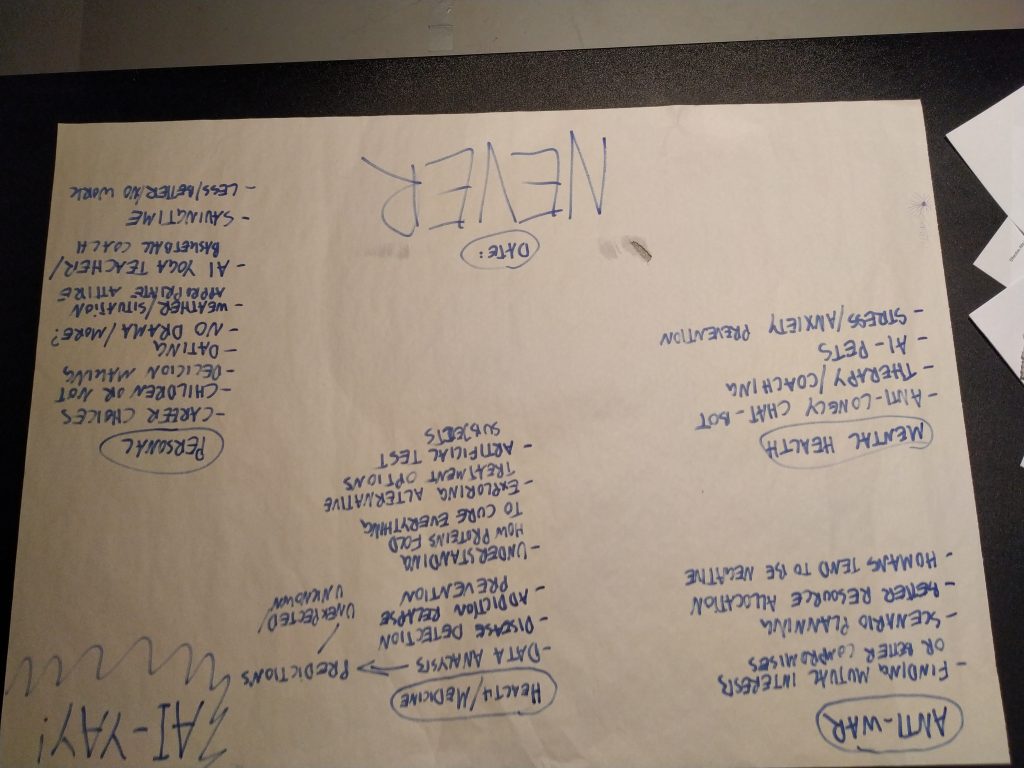

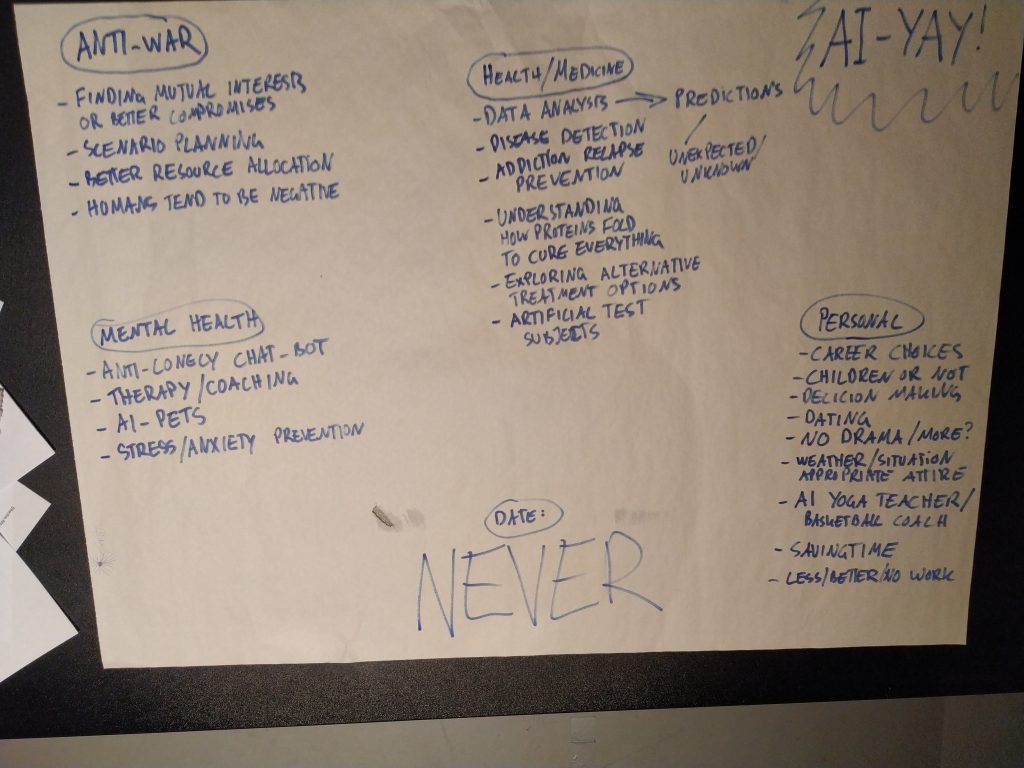

Group 3 – Bright Lights (AI-YAY!)

This is the poster from the Bright Lights group.

Anti-War

AGI could find better compromises and mutual interests. It could help us plan scenarios better (show the losses from war, keep wars shorter, show the benefits of cooperating, etc.). We pesky humans tend to be quite negative; what if AGI could show us that war is not needed to achieve our goals?

War

Health/Medicine

With better data analysis, we could make better predictions and develop better medicine. We could spot the unexpected/unknown and intervene before it’s too late.

We could help people with addictions avoid relapse. Data from cheap trackers could help someone stay clean. It could even detect bad patterns and help people before things get too bad.

If we understand how proteins fold (and we’re getting better at it, see AlphaFold), we might cure every disease we know. The possibilities of AGI for health are endless and exciting.

Mental Health

A chatbot could keep you company. As the population ages (before we make older people fit again), an AGI could be their companion.

Chat to your AGI and ditch the therapist. Get coaching and live your best life. And not only for the people who have access to both right now: therapy and coaching for everyone in the world.

Personal

AGI could help you make life decisions (think of the dating simulation in Black Mirror). Choose the very best career for your happiness/fulfilment. Should you have children? Dating, NO MORE DRAMA!

Have your AGI tell you the weather. Have it be your personal yoga teacher, your basketball coach. Let it take away boring work, save you time, and let you live your best life.

Conclusion

Two hours isn’t enough to tackle AI and our possible future. But I do hope that we’ve been able to inspire everyone who was there, and all of you reading this, to think about the possible futures ahead.

“May we live in interesting times” is a quote I find very appropriate for this topic. The future can go many ways (and already is). Whether, and when, we will have AGI remains to be seen. Until then, I hope to see you at our next Meetup.

Resources

Videos

Nick Bostrom – What happens when our computers get smarter than we are?

Max Tegmark – How to get empowered, not overpowered, by AI

Grady Booch – Don’t fear superintelligent AI

Shyam Sankar – The rise of human-computer cooperation

Anthony Goldbloom – The jobs we’ll lose to machines, and the ones we won’t (4 min)

Two Minute Papers – How Does Deep Learning Work?

Crash Course – Machine Learning & Artificial Intelligence

Computerphile – Artificial Intelligence with Rob Miles (13 episodes)

Books

Superintelligence – Nick Bostrom (examining the risks)

Life 3.0 – Max Tegmark (optimistic)

The Master Algorithm – Pedro Domingos (explanation of learning algorithms)

The Singularity Is Near – Ray Kurzweil (very optimistic)

Humans Need Not Apply – Jerry Kaplan (good intro, conversational)

Our Final Invention – James Barrat (negative effects)

Isaac Asimov’s Robot Series (fiction 1940-1950, loads of fun!)

TV Shows

Person of Interest (good considerations)

Black Mirror (episodic, dark side of technology)

Westworld (AI as humanoid robots)

Movies

Ex Machina (AI as humanoid robot)

Blade Runner (cult classic, who/what is human?)

Eagle Eye (omnipresent AI system)

Her (AI and human connection)

2001: A Space Odyssey (1968, AI ship computer)

Research/Articles

Effective Altruism Foundation on Artificial Intelligence Opportunities and Risks

80,000 Hours Problem Profile of Artificial Intelligence: https://80000hours.org/problem-profiles/positively-shaping-artificial-intelligence/

80,000 Hours on AI policy (also has great podcasts): https://80000hours.org/topic/priority-paths/ai-policy/

Great, and long-but-worth-it, article on The AI Revolution

Future Perfect (Vox) article on AI safety alignment

Ernest Davis on Ethical Guidelines for a Superintelligence: https://cs.nyu.edu/faculty/davise/papers/Bostrom.pdf

On how bad we are at predicting when AGI will happen: https://intelligence.org/files/PredictingAI.pdf

Responses to Catastrophic AGI Risk: https://intelligence.org/files/ResponsesAGIRisk.pdf

Kevin Kelly on Thinkism, why the Singularity (/AGI) won’t happen soon: https://kk.org/thetechnium/thinkism/

DeepMind (Google/Alphabet) on AlphaFold (protein folding): https://deepmind.com/blog/alphafold/

Meetup:

Awesome newsletter (recommended by an attendee):

http://www.exponentialview.co/

Download the full resource list